Microsoft Releases VibeVoice-ASR: A Unified Speech-to-Text Model Designed to Handle 60-Minute Long-Form Audio in a Single Pass

Microsoft has released VibeVoice-ASR as part of the VibeVoice family of open source frontier voice AI models. VibeVoice-ASR is described as a unified speech-to-text model that can handle 60-minute long-form audio in a single pass and output structured transcriptions that encode Who, When, and What, with support for Customized Hotwords.

VibeVoice sits in a single repository that hosts Text-to-Speech, real-time TTS, and Automatic Speech Recognition models under an MIT license. VibeVoice uses continuous speech tokenizers that run at 7.5 Hz and a next-token diffusion framework in which a Large Language Model reasons over text and dialogue while a diffusion head generates acoustic detail. This framework is mainly documented for TTS, but it defines the overall design context in which VibeVoice-ASR lives.

Long form ASR with a single global context

Unlike conventional ASR (Automatic Speech Recognition) systems that first cut audio into short segments and then run diarization and alignment as separate components, VibeVoice-ASR is designed to accept up to 60 minutes of continuous audio input within a 64K token length budget. The model keeps one global representation of the full session. This means the model can maintain speaker identity and topic context across the entire hour instead of resetting every few seconds.
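To get a feel for why an hour of audio can fit in the stated budget, here is a back-of-envelope sketch. It assumes that VibeVoice-ASR consumes audio at the same 7.5 Hz tokenizer rate quoted for the VibeVoice family, that each frame costs one token, and that "64K" means 65,536 tokens. None of these three points is confirmed for the ASR model, so treat the numbers as illustrative only.

```python
# Back-of-envelope token budget. The one-token-per-frame mapping and the
# reading of "64K" as 65,536 are assumptions, not documented facts.
FRAME_RATE_HZ = 7.5          # tokenizer rate quoted for the VibeVoice family
CONTEXT_TOKENS = 64 * 1024   # assumed meaning of "64K"


def audio_tokens(duration_s: float, frame_rate_hz: float = FRAME_RATE_HZ) -> int:
    """Number of audio frames (assumed tokens) for a clip of the given length."""
    return int(duration_s * frame_rate_hz)


def remaining_budget(duration_s: float, context_tokens: int = CONTEXT_TOKENS) -> int:
    """Tokens left for prompt, hotwords, and transcript after the audio."""
    return context_tokens - audio_tokens(duration_s)


print(audio_tokens(60 * 60))       # 27000
print(remaining_budget(60 * 60))   # 38536
```

Under these assumptions, an hour of audio would take roughly 27K tokens, leaving the rest of the window for hotwords and the generated transcript.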

60-minute Single-Pass Processing

The first key feature is single-pass processing of long audio. Many conventional ASR systems handle long recordings by cutting them into short segments, which can lose global context. VibeVoice-ASR instead takes up to 60 minutes of continuous audio within a 64K token window so it can maintain consistent speaker tracking and semantic context across the entire recording.

This is important for tasks like meeting transcription, lectures, and long support calls. A single pass over the complete sequence simplifies the pipeline. There is no need to implement custom logic to merge partial hypotheses or repair speaker labels at boundaries between audio chunks.

Customized Hotwords for domain accuracy

Customized Hotwords are the second key feature. Users can provide hotwords such as product names, organization names, technical terms, or background context. The model uses these hotwords to guide the recognition process.

This allows you to bias decoding toward the correct spelling and pronunciation for domain-specific tokens without retraining the model. For example, a developer can pass internal project names or customer-specific terms at inference time. This is useful when deploying the same base model across several products that share similar acoustic conditions but very different vocabularies.
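When one base model serves several products, the hotword list for each deployment can be assembled from a shared vocabulary plus product-specific terms. The sketch below only prepares such a list. The helper `build_hotwords`, its `limit` parameter, and the example terms are hypothetical, and the code does not show how the list is passed to VibeVoice-ASR because the inference API is not described here.

```python
from typing import Iterable


def build_hotwords(*sources: Iterable[str], limit: int = 100) -> list[str]:
    """Merge term lists, dropping blanks and case-insensitive duplicates.

    Hypothetical helper: `limit` is an illustrative cap, not a model constraint.
    """
    seen: set[str] = set()
    merged: list[str] = []
    for source in sources:
        for term in source:
            cleaned = " ".join(term.split())  # collapse stray whitespace
            key = cleaned.casefold()
            if cleaned and key not in seen:
                seen.add(key)
                merged.append(cleaned)
    return merged[:limit]


shared = ["VibeVoice", "LoRA"]
product_a = ["  Project Falcon ", "lora", "Contoso"]  # made-up example terms
print(build_hotwords(shared, product_a))
# ['VibeVoice', 'LoRA', 'Project Falcon', 'Contoso']
```

Keeping the first spelling seen for each term means the shared list sets the canonical casing, which matters when the goal is a consistent written form in the transcript.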

Microsoft also ships a finetuning-asr directory with LoRA-based fine-tuning scripts for VibeVoice-ASR. Together, hotwords and LoRA fine-tuning give a path for both lightweight adaptation and deeper domain specialization.

Rich Transcription, diarization, and timing

The third feature is Rich Transcription with Who, When, and What. The model jointly performs ASR, diarization, and timestamping, and returns a structured output that indicates who said what and when.

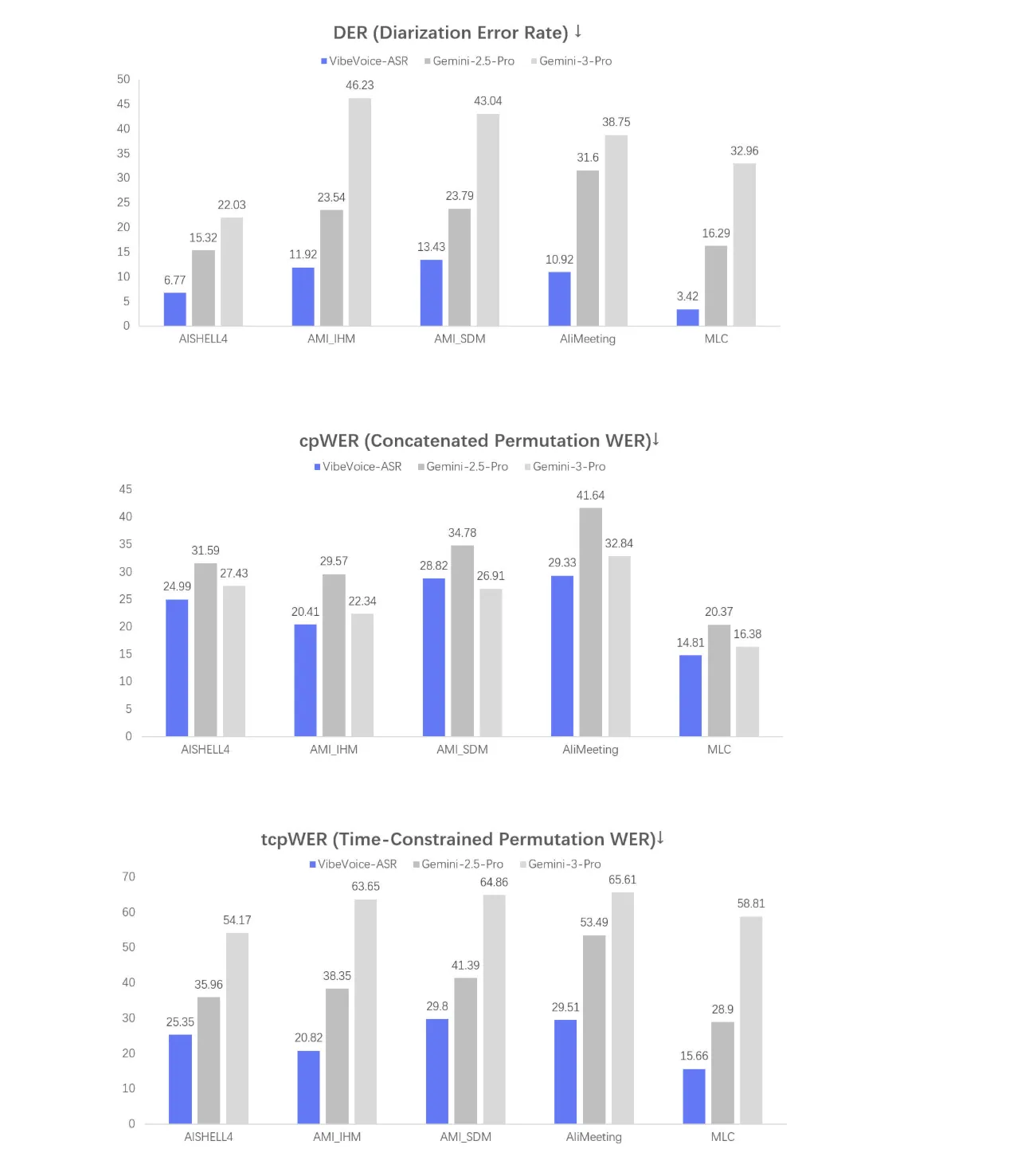

The evaluation figures report three metrics: DER, cpWER, and tcpWER.

- DER (Diarization Error Rate) measures how well the model assigns speech segments to the correct speaker.

- cpWER (concatenated minimum-permutation word error rate) and tcpWER (its time-constrained variant) are word error rate metrics for multi-speaker conversational settings.

These graphs summarize how well the model performs on multi-speaker long-form data, which is the primary target setting for this ASR system.
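For readers unfamiliar with these metrics, the sketch below shows the commonly used definitions of DER and cpWER in simplified form. It is not the scoring code behind the reported figures. It assumes each speaker's utterances are already concatenated into one string and that reference and hypothesis have the same number of speakers. tcpWER, which additionally constrains matches by time, is not covered.

```python
from itertools import permutations


def der(missed: float, false_alarm: float, confusion: float, total_speech: float) -> float:
    """Diarization Error Rate from error durations (all in seconds)."""
    return (missed + false_alarm + confusion) / total_speech


def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Word-level Levenshtein distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (r != h)))  # match or substitution
        prev = curr
    return prev[-1]


def cp_wer(ref: dict[str, str], hyp: dict[str, str]) -> float:
    """cpWER sketch: try every speaker mapping, keep the one with fewest errors."""
    ref_words = [text.split() for text in ref.values()]
    hyp_words = [text.split() for text in hyp.values()]
    if len(ref_words) != len(hyp_words):
        raise ValueError("this sketch assumes equal speaker counts")
    total_ref = sum(len(words) for words in ref_words)
    best = min(
        sum(edit_distance(r, h) for r, h in zip(ref_words, perm))
        for perm in permutations(hyp_words)
    )
    return best / total_ref


reference = {"alice": "hello there how are you", "bob": "fine thanks"}
hypothesis = {"spk0": "fine thanks", "spk1": "hello there how are we"}
print(round(cp_wer(reference, hypothesis), 3))  # 0.143
print(der(missed=30, false_alarm=12, confusion=18, total_speech=1200))  # 0.05
```

The permutation search is what makes cpWER independent of speaker label names: the hypothesis labels `spk0` and `spk1` are matched to whichever reference speakers give the lowest total error. It grows factorially with speaker count, so real scoring tools use a more efficient assignment step.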

The structured output format is well suited for downstream processing such as speaker-specific summarization, action item extraction, or analytics dashboards. Since segments, speakers, and timestamps already come from a single model, downstream code can treat the transcript as a time-aligned event log.
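As a rough illustration of the event-log idea, the sketch below models a transcript as a list of records and runs two simple queries over it. The `Segment` fields and the sample data are assumptions made for this example. The actual output schema of VibeVoice-ASR is not specified here and may differ.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """Hypothetical record for one transcript entry."""
    speaker: str   # Who
    start: float   # When: start time in seconds
    end: float     # When: end time in seconds
    text: str      # What


def talk_time(segments: list[Segment]) -> dict[str, float]:
    """Total speaking time per speaker, in seconds."""
    totals: dict[str, float] = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


def said_between(segments: list[Segment], t0: float, t1: float) -> list[Segment]:
    """Segments that overlap the window [t0, t1), in time order."""
    hits = (s for s in segments if s.start < t1 and s.end > t0)
    return sorted(hits, key=lambda s: s.start)


transcript = [
    Segment("Speaker 1", 0.0, 4.5, "Let's review the launch plan."),
    Segment("Speaker 2", 4.5, 9.0, "I will send the draft by Friday."),
    Segment("Speaker 1", 9.0, 11.0, "Sounds good."),
]
print(talk_time(transcript))  # {'Speaker 1': 6.5, 'Speaker 2': 4.5}
print([s.speaker for s in said_between(transcript, 4.0, 6.0)])
# ['Speaker 1', 'Speaker 2']
```

Per-speaker summarization or action item extraction can then be layered on top by filtering on `speaker` or scanning `text` within a time window.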

Key Takeaways

- VibeVoice-ASR is a unified speech-to-text model that handles 60-minute long-form audio in a single pass within a 64K token context.

- The model jointly performs ASR, diarization, and timestamping, so it outputs structured transcripts that encode Who, When, and What in a single inference step.

- Customized Hotwords let users inject domain-specific terms such as product names or technical jargon to improve recognition accuracy without retraining the model.

- Evaluation with DER, cpWER, and tcpWER focuses on multi-speaker conversational scenarios, which aligns the model with meetings, lectures, and long calls.

- VibeVoice-ASR is released in the VibeVoice open-source stack under the MIT license with official weights, fine-tuning scripts, and an online Playground for experimentation.

Check out the Model Weights, Repo and Playground.

The post Microsoft Releases VibeVoice-ASR: A Unified Speech-to-Text Model Designed to Handle 60-Minute Long-Form Audio in a Single Pass appeared first on MarkTechPost.